2025

SpeakEasy: Voice-Driven AI for Inclusive XR

Michael Chaves

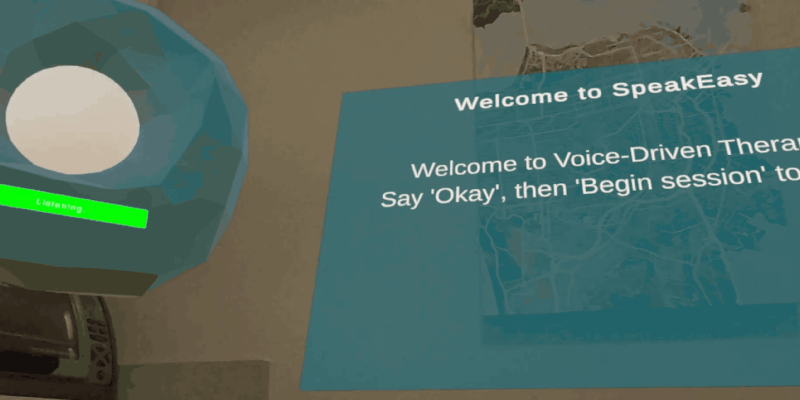

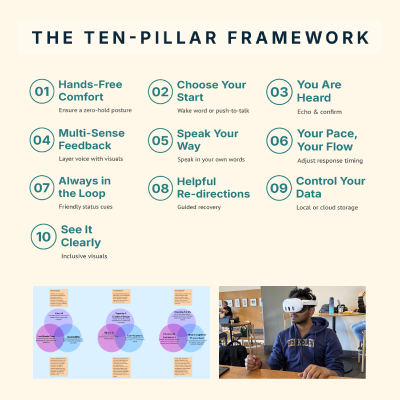

This thesis explores how voice-driven artificial intelligence can make extended reality (XR) more inclusive for users with limited mobility. Recognizing that most XR systems still depend on handheld controllers, SpeakEasy investigates how natural speech can serve as a primary mode of interaction, reducing physical barriers and fostering emotional ease. Through literature review, participatory co-design sessions, and iterative prototyping, the project developed a Ten-Pillar Framework for Accessible Voice Interaction in Spatial Computing, addressing factors such as feedback clarity, user autonomy, and ethical data control.

The final SpeakEasy prototype demonstrates hands-free, voice-based navigation in a mixed-reality wellness environment, enabling users to complete tasks, receive adaptive feedback, and engage in guided experiences using only speech. User testing showed high satisfaction and strong usability, suggesting that natural language and real-time AI personalization can make immersive technologies more intuitive and equitable. By centering accessibility from the start, SpeakEasy offers designers a practical roadmap toward XR experiences that welcome more bodies, voices, and perspectives.