MATH 251 Statistical and Machine Learning Classification

Fall 2022, San Jose State University

Course information [syllabus]

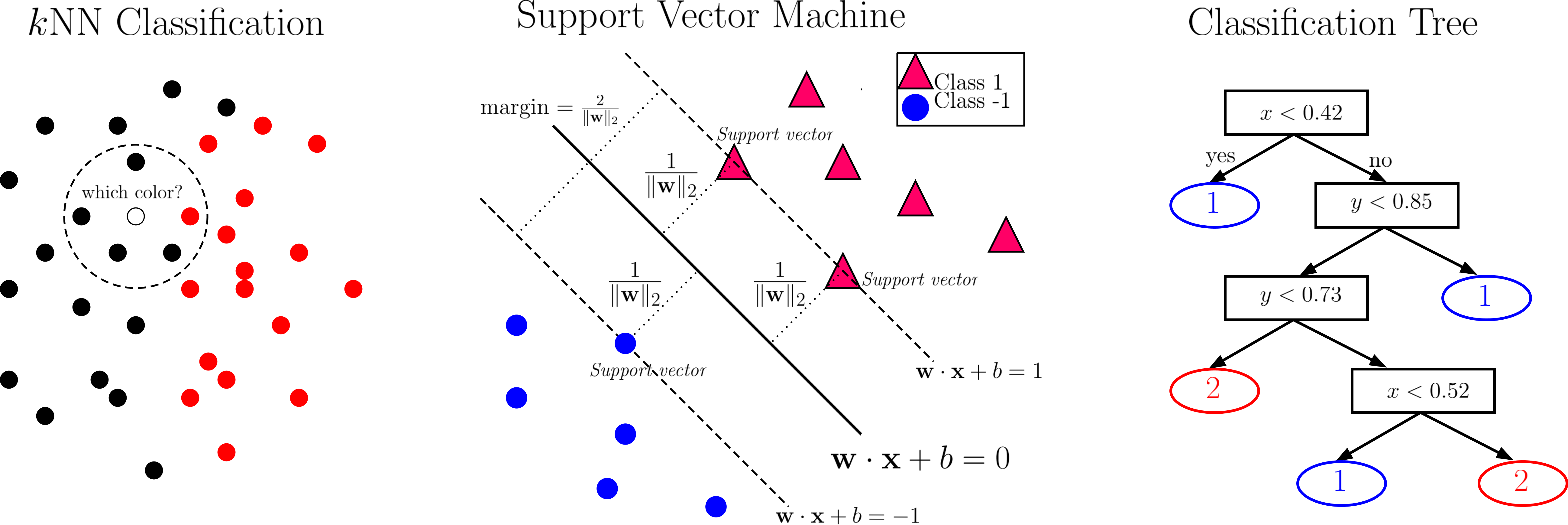

This is a graduate-level course on classification, a branch of machine learning, covering the following topics:

- Instance-based Methods

- Discriminant Analysis

- Logistic Regression

- Support Vector Machine

- Kernel Methods

- Ensemble Methods

- Neural Networks and Deep Learning

All topics are taught using the MNIST Handwritten Digits benchmark dataset. This teaching strategy was partly inspired by Michael Nielsen's free online book, Neural Networks and Deep Learning, which notes explicitly that this dataset hits a "sweet spot": it is challenging, but "not so difficult as to require an extremely complicated solution, or tremendous computational power". In addition, the digit recognition problem is easy to understand, yet practically important.

Prerequisites: Math 164 Mathematical Statistics and Math 250 Mathematical Data Visualization

Technology requirements:

- Canvas: Assignments and their grades will be posted in Canvas (accessible also via http://one.sjsu.edu/).

- Piazza: The class will use Piazza as the bulletin board. Please post all course-related questions there.

- Computing: Students may use MATLAB, Python, or both as their programming language(s).

Recommended readings:

- James, Witten, Hastie and Tibshirani (2017), “An Introduction to Statistical Learning with Applications in R”, Springer

- Hastie, Tibshirani, and Friedman (2009), “The Elements of Statistical Learning: Data Mining, Inference, and Prediction”, Springer-Verlag

- Nielsen (2015), “Neural Networks and Deep Learning”, Determination Press

- Goodfellow, Bengio, and Courville (2016), “Deep Learning”, MIT Press

Course progress

Updated slides will be posted below as the course progresses (those in light color are from Fall 2020 and will be updated). Please download a fresh copy of the slides before each class (remember to refresh your browser).

| Dates | Lecture Slides | Further Reading |
|---|---|---|
| 8/23 | Course introduction [slides] | Chapters 1 and 2 of recommended reading 2 |
| 8/25 | Instance-based classifiers [slides] | Sections 2.2.3 and 5.1 of recommended reading 1 |
| 9/1 | Dimension reduction for classification [slides] | |
| 9/6 | Evaluation criteria [slides] | Yining Chen's slides |
| 9/8 | Bayes classifiers [slides] | Section 4.4 of recommended reading 1 |
| 9/20 | Logistic regression [slides] | Section 4.3 of recommended reading 1 |
| 10/11 | Support vector machine [slides] | [Chapter 9 of recommended reading 1] [Lagrange Dual] |
| 11/1 | Ensemble learning [slides] | [Trevor Hastie's slides] [Adele Cutler's lecture] [Chapter 8 of recommended reading 1] |
| 11/15 | Neural networks [slides] | [Michael Nielsen’s book] |
| 12/1 | Introduction to deep learning resources [slides] | [MIT 6.S191 course page] [Stanford CS 231n course page] |

Additional learning resources

Useful course websites

- CS9840a Learning and Computer Vision at University of Western Ontario

- CS 229 Machine Learning at Stanford University

- CSL 864 - Special Topics in AI: Classification at Microsoft

- CS4/5780: Machine Learning for Intelligent Systems at Cornell

- CS7616 - Pattern Recognition at Georgia Tech

- MIT 6.S191 Introduction to Deep Learning

Data sets

- USPS Zip Code Data

- MNIST handwritten digits

- Fashion MNIST

- Extended Yale Face Database B

- UCI Machine Learning Repository

- LibSVM data sets

- Oxford Flowers Category Datasets

Instructor feedback

Feedback at any time of the semester is encouraged and greatly appreciated, and will be seriously considered by the instructor for improving the course experience for both you and your classmates. Please submit your anonymous feedback through this page.